Apple Silicon vs NVIDIA CUDA: AI Comparison 2025, Benchmarks, Advantages and Limitations

Since the launch of the first Apple Silicon M1 processor in 2020, up to the recent M4, Apple has profoundly changed its approach to computing for artificial intelligence. In just a few years, the company moved from architectures similar to market standards to a System on a Chip (SoC) integrating CPU, GPU, Neural Engine, and high-bandwidth unified memory — a true paradigm shift compared to traditional systems.

On the other hand, NVIDIA CUDA, launched in 2006, has remained faithful to its model: dedicated GPU, separate VRAM, and massively parallel computing. This architecture, supported by an exceptionally mature software ecosystem, continues to dominate large-scale model training.

These two approaches today embody two distinct visions:

- Apple Silicon focuses on hardware integration, shared memory, and energy efficiency, ideal for local AI and portability.

- CUDA prioritizes raw power and hardware specialization, optimized for massive workloads and the cloud.

The aim of this article is to determine in which cases Apple Silicon can surpass CUDA, and in which situations CUDA retains a decisive advantage. We will analyze their architectures, performance, tools, limitations, and real-world use cases to provide a clear and up-to-date view in 2025.

Apple Silicon vs NVIDIA CUDA

| Criterion | Apple Silicon (M1 → M4) | NVIDIA CUDA (RTX, H100…) |

|---|---|---|

| Architecture | Integrated SoC (CPU, GPU, Neural Engine, unified memory) | CPU + dedicated GPU with separate VRAM |

| Memory | Shared, common bandwidth (up to 546 GB/s) | Dedicated VRAM, very fast (up to 1 TB/s on high-end models) |

| Raw performance | Fewer FLOPS, but optimized through integration | Maximum power in parallel computing |

| Energy efficiency | Very high, ideal for local AI | More power-hungry, optimized for data centers |

| Software ecosystem | MLX, MPS, Core ML (maturity in progress) | PyTorch/TensorFlow optimized for CUDA, mature tools |

| Strong use case | Local inference, rapid prototyping | Massive training, cloud production |

- 1. Architecture: Two Opposing Philosophies

- 2. AI Performance Comparison

- 3. Tools and Frameworks

- 4. Specific Limitations and Constraints

- 5. Use Cases and Real-World Feedback

- 6. Outlook

- Conclusion: Developing an AI Application

1. Architecture: Two Opposing Philosophies

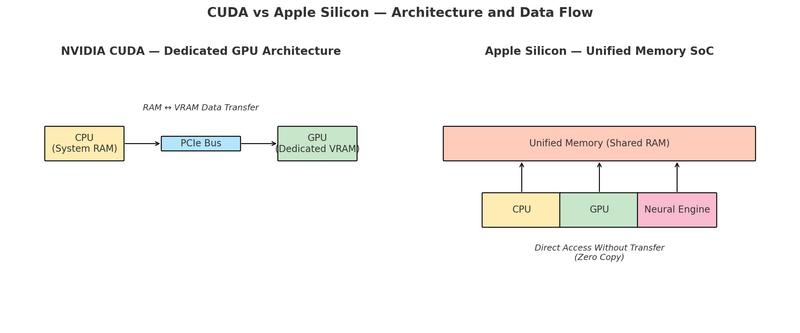

1.1. NVIDIA CUDA — Raw Power and a Mature Ecosystem

Since its creation in 2006, CUDA (Compute Unified Device Architecture) has become the de facto standard for massively parallel computing, especially in artificial intelligence and machine learning. The CUDA architecture is based on a dedicated GPU, equipped with its own high-bandwidth video memory (VRAM), connected to the central processor (CPU) via a PCI Express (PCIe) bus.

Operating Principle

- CPU and system RAM: execute general code, prepare and organize data.

- GPU and VRAM: handle massively parallel computations (matrix multiplications, convolutions, etc.).

- Communication: data must be transferred between RAM and VRAM via PCIe.

Strengths

- Raw power: high-end NVIDIA cards such as the RTX 4090 or H100 achieve computational levels in teraflops or even petaflops, with thousands of CUDA cores.

- Dedicated VRAM: large capacity (24 to 80 GB on some cards), bandwidth up to 1 TB/s.

- Software ecosystem: native compatibility and heavy optimization in PyTorch, TensorFlow, JAX, as well as specialized libraries such as cuDNN, TensorRT, NCCL, FlashAttention, or bitsandbytes.

- Scalability: ability to link multiple GPUs via NVLink to form massive training clusters.

Limitations

- CPU ↔ GPU transfers: these exchanges introduce latency, especially for workflows requiring frequent switching between CPU and GPU.

- Memory segmentation: VRAM is isolated, so a model exceeding GPU capacity requires partitioning or offloading (with performance loss).

- Energy consumption: high-end cards often consume 300 to 700 W, a key factor in operating costs and cooling requirements.

1.2. Apple Silicon — Unified Memory SoC

Apple chose a radically different approach by grouping all main components onto the same chip, a System on a Chip (SoC). The CPU, GPU, Neural Engine, matrix coprocessors AMX (Apple Matrix Extension) or SME (Scalable Matrix Extension), memory controllers, and specialized accelerators share the same physical memory space — known as the Unified Memory Architecture (UMA).

Operating Principle

- Single memory: CPU, GPU, and Neural Engine directly access the same data in RAM.

- Zero-copy: no need to transfer a tensor from CPU to GPU — it is directly accessible by all.

- Internal optimization: the system dynamically decides which unit (GPU, AMX, Neural Engine) executes a task, depending on the type of operation.

Strengths

- Energy efficiency: an M3 Max or M4 Max consumes between 40 and 80 W under heavy load while offering competitive performance for inference and prototyping.

- Software simplicity: less manual memory transfer management; cleaner and more stable code.

- High bandwidth: up to 546 GB/s (M4 Max), shared by all compute units.

- SoC versatility: tasks not purely GPU-bound can be accelerated by AMX or the Neural Engine.

Limitations

- Lower raw power: in pure computation (FLOPS), high-end NVIDIA GPUs remain far ahead, especially for large-scale training.

- GPU memory cap: the GPU can use only about 75% of system RAM (e.g., ~96 GB usable on a 128 GB Mac).

- Less mature ecosystem: although MLX, MPS, and Core ML are progressing quickly, some CUDA-optimized libraries have no direct equivalent on Apple Silicon.

In summary: CUDA and Apple Silicon embody two opposing visions. CUDA maximizes raw power with a specialized architecture, optimized for enormous computational workloads, but is power-hungry and dependent on memory transfers. Apple Silicon focuses on full integration and seamless memory access, at the cost of lower raw power but with unmatched energy efficiency and development simplicity.

2. AI Performance Comparison

2.1. Training

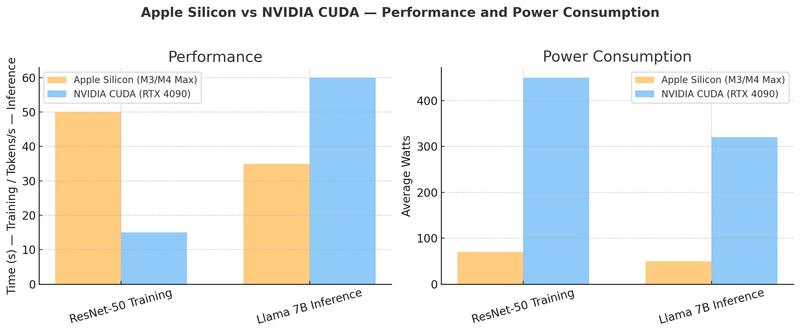

Benchmarks on standard tasks, such as training a ResNet-50 on ImageNet or medium-sized Transformer models, confirm that high-end NVIDIA GPUs retain a clear lead in raw speed.

For example:

- An RTX 4090 can complete one ResNet-50 training epoch in about 15 seconds.

- An M3 Max or M4 Max performs the same operation in 45 to 50 seconds.

This gap comes from the much higher parallel computing power of NVIDIA GPUs, combined with extremely optimized software libraries (cuDNN, TensorRT, FlashAttention, etc.).

However, energy efficiency changes the perspective.

- M3/M4 Max: consumption between 40 and 80 W under heavy load.

- RTX 4090: consumption can reach 450 W.

Thus, at equal energy usage, Apple Silicon accomplishes more work per joule spent, which can be an advantage in power- or cooling-constrained environments.

In summary:

- Choose CUDA: for large-scale training of big models requiring maximum speed and specialized libraries.

- Choose Apple Silicon: for rapid prototyping, medium-sized models, and environments where energy consumption is a key factor.

2.2. Inference

Inference, which runs an already-trained model, highlights Apple Silicon’s strengths, especially for medium to large-sized LLMs (Large Language Models).

Practical examples:

- Llama 7B: an M3 Max can generate 30 to 40 tokens per second with a quantized model, while remaining silent and energy efficient.

- Llama 13B: still smooth performance, with very low first-token latency thanks to unified memory.

- Llama 70B: possible on a Mac Studio M2 Ultra with 192 GB unified RAM, at around 8 to 12 tokens per second — something impossible on a single consumer GPU.

By comparison, CUDA still leads in absolute inference speed for massive models, but Apple Silicon stands out for running locally models that would exceed a single GPU’s VRAM capacity. Power consumption is also much lower:

- M3 Max: ~50 W during LLM generation.

- RTX 4090: often >300 W for the same task.

In summary:

- Apple Silicon excels in local inference, especially for models from 7B to 70B, offering an excellent balance of speed, consumption, and silence.

- CUDA remains preferable when maximum generation speed is critical, or for very large-scale production inference.

3. Tools and Frameworks

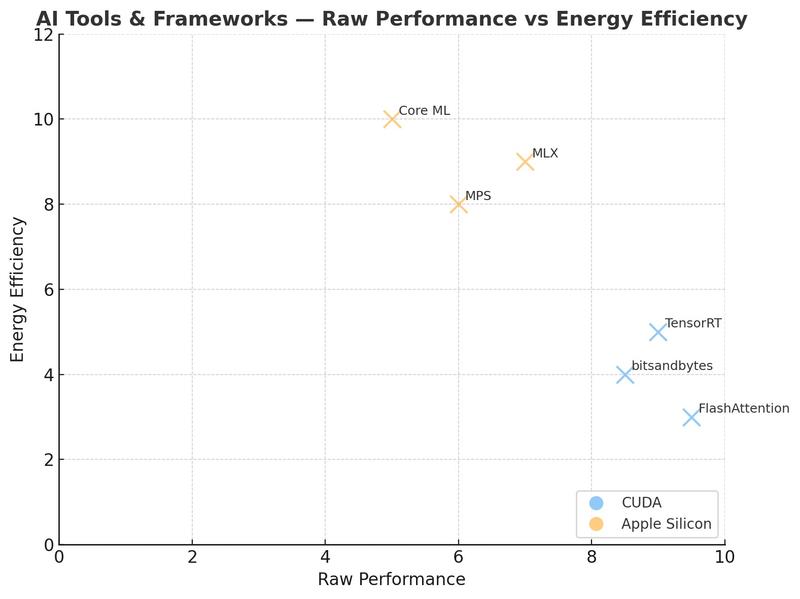

3.1. CUDA: Maturity and Extreme Optimizations

The CUDA ecosystem benefits from over fifteen years of continuous optimization and massive industry adoption. It offers a suite of specialized tools and libraries that fully exploit NVIDIA GPUs, delivering significant performance gains for both training and inference:

- FlashAttention: optimized implementation of the Transformer attention mechanism, reducing memory usage and increasing speed — particularly effective for LLMs.

- bitsandbytes: library for quantization and memory optimizations (8-bit, 4-bit), essential for handling very large models on GPUs with limited VRAM.

- TensorRT: high-performance inference engine capable of automatically optimizing models for substantial speed gains.

CUDA’s maturity comes with massive industrial support. Major cloud providers (AWS, Azure, GCP, Oracle, etc.) offer CUDA-optimized virtual machines, enabling direct production deployment. Leading frameworks such as PyTorch, TensorFlow, and JAX are optimized for CUDA first, ensuring maximum compatibility and performance.

3.2. Apple Silicon: MPS, MLX, and Core ML

Apple Silicon relies on a set of tools that, while more recent than CUDA, are evolving rapidly and leverage the architecture’s unique features.

Metal Performance Shaders (MPS)

MPS is the abstraction layer that allows frameworks such as PyTorch and JAX to run on Apple Silicon with minimal code changes. It translates standard GPU operations into optimized Metal instructions, taking advantage of unified memory and high bandwidth.

Benchmarks show that a model like ResNet-50 runs about 3× slower than on an RTX 4090, but with over 80% lower energy consumption.MLX

A native framework developed by Apple to fully exploit the SoC and its specialized units (GPU, AMX, Neural Engine).

It uses lazy evaluation to fuse and optimize operations before execution. Its NumPy-like API makes it easy to learn, and it integrates smoothly with the Python ecosystem.

Tests show that MLX is particularly effective for local language model inference, generating up to 50 tokens/s on a quantized 4-bit Llama 3B with an M3 Max.Core ML

Mainly intended for integrating models into macOS and iOS applications, Core ML makes full use of the Neural Engine for high performance and minimal power usage. Converted models benefit from automatic optimizations (quantization, operation fusion) and can achieve latencies under 5 ms for certain lightweight networks.

In summary, CUDA offers an extremely mature ecosystem designed for maximum performance and cloud scalability, while Apple Silicon focuses on tight hardware/software integration and ease of local execution, with growing potential as MPS and MLX continue to improve.

| Tool / Framework | Platform | Strengths | Limitations | Ideal Use Case |

|---|---|---|---|---|

| FlashAttention | CUDA | Major acceleration for Transformers, reduced memory, widely used for LLMs | Not available on Apple Silicon | High-performance LLM training or inference on NVIDIA GPUs |

| bitsandbytes | CUDA | 8/4-bit quantization, major memory savings, integrated into Hugging Face | No optimized MPS implementation | Loading large models on GPUs with limited VRAM |

| TensorRT | CUDA | Automatic inference optimization, very fast | Limited to NVIDIA | High-performance deployment in NVIDIA cloud or edge environments |

| MPS (Metal Performance Shaders) | Apple Silicon | PyTorch/JAX compatibility, zero-copy memory, good energy efficiency | Slower than CUDA for large training, some ops unsupported | Prototyping, light to medium training, local inference |

| MLX | Apple Silicon | Native optimized framework, lazy evaluation, NumPy-like API, excellent LLM perf | Young ecosystem, fewer third-party tools | Optimized local inference, light fine-tuning on Mac |

| Core ML | Apple Silicon | Uses Neural Engine, automatic optimizations, extremely low power usage | Requires prior model conversion, less flexible for R&D | Deployment in macOS/iOS apps with real-time inference |

4. Specific Limitations and Constraints

Despite its strengths, Apple Silicon has some limitations that are important to understand before committing to an AI project on this platform. These constraints stem from both the hardware architecture and the software ecosystem.

4.1. Containerization and Metal GPU Access

Using containers, especially via Docker, remains problematic for GPU utilization on Apple Silicon. Metal, Apple’s graphics and compute API, requires direct hardware access, which Linux containers running in a virtual machine cannot obtain.

In practice, this means a container cannot take advantage of Apple Silicon’s GPU or Neural Engine. Development environments must therefore often run natively on macOS to benefit from hardware acceleration — which can create a mismatch with production if it runs on Linux with CUDA.

4.2. Neural Engine as a Black Box

The Neural Engine is a highly efficient specialized accelerator, but its workings remain closed. Unlike CUDA, which allows custom kernel development, Apple does not provide direct access to this component. Developers must use Core ML or compatible APIs, limiting flexibility and making certain optimizations impossible. This approach ensures stability and security but can slow innovation in advanced research scenarios.

4.3. Partial Incompatibility with Certain Tools

Although PyTorch and JAX are compatible via MPS, some essential libraries in the CUDA ecosystem still have no equivalent on Apple Silicon.

Notable examples include:

- FlashAttention (optimized attention)

- bitsandbytes (8/4-bit quantization)

- Certain accelerated implementations in xFormers

In some cases, frameworks fall back to slower CPU implementations, resulting in significant performance loss.

Hugging Face Transformers on Apple Silicon

1. Incomplete MPS coverage and CPU fallbacks

The MPS backend (PyTorch on Metal) does not yet implement all operations. The official documentation recommends enabling CPU fallback via PYTORCH_ENABLE_MPS_FALLBACK=1; moreover, distributed training is not supported on MPS.

2. Attention stability/performance

Recent reports mention issues with scaled_dot_product_attention (SDPA) that can cause crashes on macOS/Apple Silicon, and memory regressions in MPS have been tracked in PyTorch in 2025. In practice, many users force the “eager” attention implementation in Transformers to avoid unoptimized code paths.

3. No direct equivalents for certain CUDA accelerations

Transformers on Apple Silicon does not benefit from FlashAttention, the xFormers attention/SDPA kernels, or bitsandbytes (8/4-bit quantization) — the latter has no MPS support and is only activated if torch.cuda.is_available() is true. This results in lower throughput and higher memory usage than CUDA for the same models.

- Any wizard could make Flash Attention to work with Apple …

- Accelerate and bitsandbytes is needed to install but I did

- M1.M2 MacOS Users · Issue #485 · bitsandbytes- …

4. Stabilization in progress… but alternatives recommended for inference

Apple and PyTorch are steadily improving MPS (attention optimizations, quantization, etc.), but for local LLM inference, MLX and dedicated runtimes (llama.cpp, Ollama) are often faster and more efficient on Mac. Hugging Face now documents MLX usage and hosts models in the MLX format.

Minimal Best Practices (Transformers + MPS)

- Set

PYTORCH_ENABLE_MPS_FALLBACK=1to avoid missing-operation errors; ensure the device is set tomps. - Force

model.config.attn_implementation="eager"when optimized attention causes issues (recommendation based on SDPA/MPS field reports). - Avoid CUDA-only dependencies (FlashAttention, bitsandbytes) in a Mac-targeted pipeline; consider MLX/GGUF for local quantized inference.

4.4. GPU Memory Limitation

On Apple Silicon, the GPU cannot use more than about 75% of the system’s total memory. For example, a Mac with 128 GB of RAM can only use around 96 GB for GPU tasks.

This restriction is designed to preserve system stability but can be problematic for particularly large models. Quantization or model compression techniques then become essential to work around this limit.

In summary, Apple Silicon provides a powerful and integrated environment, but these constraints must be taken into account from the start of project design. They directly influence the choice of tools, software architecture, and compatibility with traditional production environments.

How to Work Around These Constraints

1. Containers and GPU

- Perform GPU-dependent development directly on macOS, reserving Docker for ancillary services (APIs, databases).

- Try OrbStack or Colima for smoother ARM environments than Docker Desktop (but still without GPU access).

2. Neural Engine

- Convert models to Core ML to take advantage of the Neural Engine.

- Favor architectures already optimized (Transformers, common CNNs) to benefit from automatic accelerations.

3. Library Incompatibilities

- Use MPS-compatible alternatives (e.g.,

mlx_lm,llama.cpp, Ollama) for LLM inference. - Avoid critical dependencies on CUDA-only components during the project design phase.

4. GPU Memory Limit

- Use quantization (4-bit or 8-bit) to reduce memory footprint.

- Load models in “lazy” mode or in segments when possible.

- Plan for machines with larger unified RAM (96 to 192 GB) for large models.

5. Use Cases and Real-World Feedback

5.1. Apple Intelligence and Private Cloud Compute

Apple puts its own Apple Silicon technologies into large-scale practice with Apple Intelligence, introduced in iOS 18 and macOS Sequoia.

The models used on devices are optimized to run entirely locally via the Neural Engine and integrated GPU, ensuring both privacy and low latency.

For requests requiring larger models, Apple relies on Private Cloud Compute, a server infrastructure built on custom Apple Silicon chips. This architecture preserves the same principles as local execution — security, encryption, and no personal data collection — while providing the power needed for more complex processing.

5.2. Video Studios and Creative Production

Several post-production and video creation studios now use Mac Studio or Mac Pro systems powered by M2 Ultra or M3 Ultra chips to integrate AI tasks into their workflows.

Concrete examples include:

- Video upscaling with tools such as Topaz Video AI.

- Generation and retouching of visual effects.

- Real-time image segmentation or analysis for editing and color grading.

Reported benefits from professionals include up to 4× lower power consumption compared to a typical GPU workstation, near-silent operation in workspaces, and the ability to load into memory models too large for a consumer GPU.

5.3. Medical Research and Image Analysis

In the medical field, some teams use Apple Silicon for diagnostic image analysis (X-rays, MRIs, CT scans) directly within local tools.

The unified memory architecture makes it possible to load complex segmentation models entirely in RAM, enabling fast and smooth processing even on workstations outside data centers.

This approach is particularly valued in clinical environments, where silence, low power consumption, and data security are top priorities.

5.4. Open Source Community and Local Tools

The open source community has quickly embraced Apple Silicon through projects optimized for macOS:

- Ollama: runs various language models locally with a simple installation process.

- llama.cpp: optimized C++ execution of LLMs with Metal support.

- MLX: Apple’s official library, enriched with many pre-quantized models available on Hugging Face.

These initiatives make it easier to access models with billions of parameters on a Mac, without dedicated GPU infrastructure.

4-bit and 8-bit quantized models reduce memory requirements while maintaining quality close to the original, making it possible to run models from 7B to 70B directly on a Mac with sufficient unified RAM.

6. Outlook

6.1. Apple Silicon Roadmap

Apple is reportedly working on an M5 chip expected before the end of 2025, featuring Transformer-specific coprocessors to significantly boost LLM performance while maintaining very high energy efficiency.

In addition, the company is developing its own server solutions (used in Private Cloud Compute) to reduce reliance on NVIDIA GPUs in its data centers.

6.2. Apple Container and GPU Access in Containers

Apple is introducing a new approach to native containerization, aiming to offer more isolated, faster environments with better macOS integration. However, the question of GPU access (via Metal) in these containers still has no official solution.

As of now, standard Docker containers on macOS cannot use the GPU; torch.backends.mps.is_available() always returns False inside a container (Stack Overflow).

That said, experimental progress with Podman (via libkrun and a virtio-gpu device) now allows Vulkan calls from a container to be redirected to the host system’s GPU. While this comes with some overhead compared to native execution, it still provides a tangible gain over CPU-only execution (Red Hat Developer).

6.3. ARM in AI: A Growing Ecosystem

The rise of ARM architecture for AI is a global trend. Ambitious initiatives are underway from:

- Qualcomm, with its Snapdragon X chips for PCs and AI server projects.

- Ampere Computing, already present in Azure, Oracle, and other cloud providers.

- Huawei and Xiaomi, developing their own ARM SoCs in China to reduce dependence on foreign technology.

This trend reinforces the idea that AI is no longer the sole domain of GPUs, and that integrated, efficient, low-power architectures have a crucial role to play — particularly in edge computing.

6.4. Evolution of Frameworks and Tools

The software landscape around Apple Silicon is rapidly maturing:

- MLX is quickly gaining advanced quantization (GPTQ, AWQ) and built-in profiling tools.

- MPS and Core ML are gradually strengthening their support for PyTorch and JAX.

- Open-source projects such as llama.cpp and Ollama are improving their support, ensuring robust performance even outside CUDA environments.

In summary, Apple Container is promising for localized workflows, but GPU access from within a container remains limited for now. The future looks encouraging, with Podman already offering an effective solution via libkrun. The ARM ecosystem, driven by Apple and other players, continues to take shape, and software frameworks are becoming increasingly relevant for AI workloads on Mac.

Conclusion: Developing an AI Application

When it comes to designing, testing, and delivering an AI application, Apple Silicon and NVIDIA CUDA address distinct needs:

- Apple excels at local work: unified memory, silent operation under load, and energy efficiency provide a smooth environment for prototyping, refining user experience, and deploying macOS/iOS apps with Core ML or MLX, while keeping data processing on-device for privacy.

- Conversely, CUDA remains the industry standard for building large-scale backends: its tool-rich ecosystem (TensorRT, Triton, multi-GPU) and cloud compatibility make it the reference when scalability, availability, and maximum performance are priorities.

| Product Dimension | Apple Silicon Advantage | CUDA Advantage |

|---|---|---|

| Local prototyping & iteration | ✅ (speed, unified memory, silence) | |

| macOS/iOS client apps (on-device) | ✅ (Core ML / MLX, privacy) | |

| Large-scale backends/APIs | ✅ (TensorRT, Triton, multi-GPU) | |

| Ecosystem & library compatibility | ✅ (Transformers + FlashAttention, bitsandbytes…) | |

| Energy efficiency (workstation/edge) | ✅ | |

| MLOps & cloud readiness | ✅ (standards, images, GPU servers) | |

| Containerization with GPU access | (still limited on Mac, Apple Container in progress) | ✅ (mature) |

| Local privacy & compliance | ✅ (on-device processing) |

Apple Silicon is not a full replacement for CUDA, but can be a strategic asset in a hybrid AI architecture.

For a product team, the most effective approach is to prototype and fine-tune the experience on Apple Silicon, then industrialize and scale on CUDA when the application must meet demanding SLAs.

A Note on the macOS (dev) / Linux (prod) Gap

At present, developing on macOS and deploying to Linux servers is not optimal and exposes several notable challenges:

- Tooling and library gaps: major CUDA-side optimizations (e.g., FlashAttention, bitsandbytes, specialized kernels) have no direct equivalent on MPS/Metal, making performance and behavior parity harder to achieve.

- Architecture differences: arm64 on Mac vs x86_64 in production leads to variations in dependencies, binary wheels, and sometimes numerics, with a risk of subtle divergences between environments.

- Containerization: GPU access in containers on macOS remains limited; CI/CD pipelines faithfully reproducing production GPU execution are harder to implement in the dev environment.

- Model formats and portability: Apple-oriented artifacts (Core ML/MLX) do not always translate directly to production toolchains (TensorRT/ONNX), and vice versa — adding conversion and validation steps.

- Observability and profiling: profiling and tracing tools differ (Xcode/Metal vs Nsight/cu*), making diagnostics less comparable between dev and prod.

In 2025–2026, several areas merit close monitoring:

- Maturity of MLX and MPS: additional Transformer operator coverage, profiling tools, and quantization could narrow the functional gap with CUDA.

- The evolution of Apple Container and GPU access in isolated environments will be key for consistent CI/CD chains between Macs in dev and Linux servers in prod.

- GPU availability and cost, along with alternatives (ROCm, Gaudi, ARM on the server side), may influence architectural choices.